We propose a novel inverse rendering method that enables the transformation of existing indoor panoramas with new indoor furniture layouts under natural illumination. To achieve this, we captured indoor HDR panoramas along with real-time outdoor hemispherical HDR photographs. Indoor and outdoor HDR images were linearly calibrated with measured absolute luminance values for accurate scene relighting. Our method consists of four key components: (1) panoramic furniture detection and removal, (2) automatic floor layout design, (3) global rendering with scene geometry, new furniture objects, and a real-time outdoor photograph, and (4) editing camera position, outdoor illumination, scene textures, and electrical light. Additionally, we contribute a new calibrated HDR (Cali-HDR) dataset that consists of 137 paired indoor and outdoor photographs.

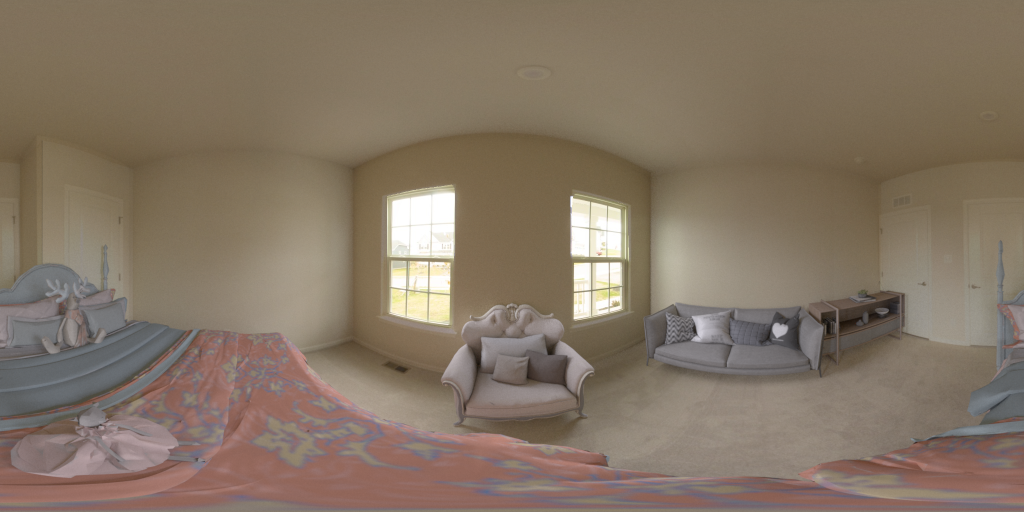

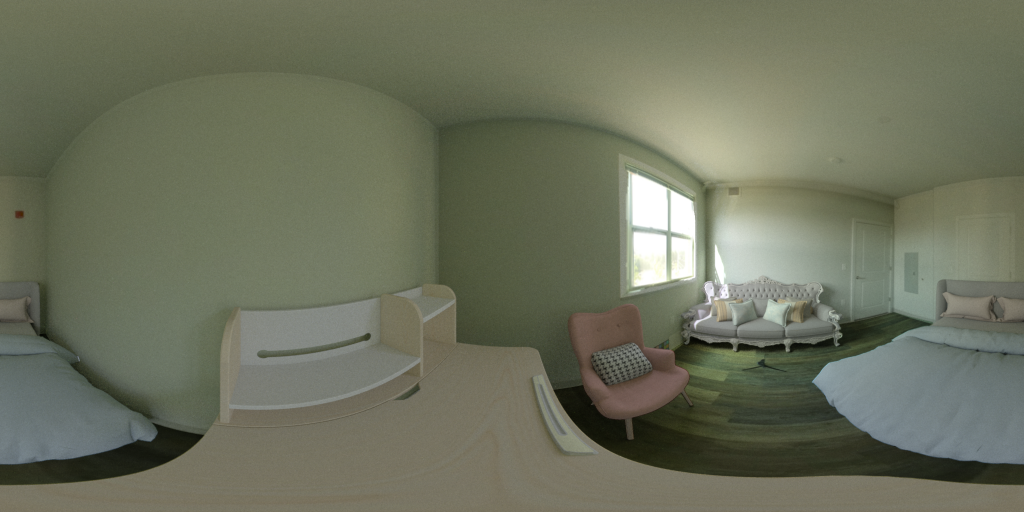

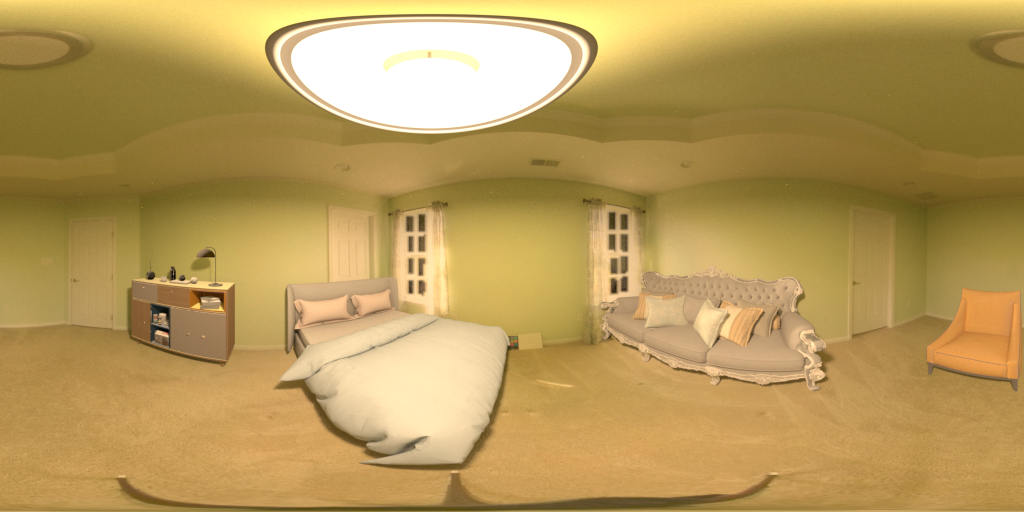

Illustration of Our Rendering Approach: A real-world scene is captured at 11:27 AM (cloudy sky condition). Different furniture objects are removed from the existing room, and the empty scene is refurnished with new virtual furniture objects. A new panorama is then virtually rendered with edited camera position, outdoor illumination at 01:00 PM (clear sky condition), scene textures, and electrical light.

We edit existing scenes with new floor layouts. For empty scenes, we furnish the room with new furniture objects. For furnished scenes, we detect and remove the existing furniture objects, and then virtually render the scenes with new furniture objects.

The camera position for virtual staging can be flexibly customized inside the rooms. Our rendering method integrates complete 3D scene geometry (including both room geometry and furniture objects), the outdoor environment map, and material textures. Changing the camera position renders the scene from a different viewpoint.
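As a rough illustration of how a panorama can be re-rendered from a new camera position, the sketch below maps each pixel of an equirectangular panorama to a ray that starts at the chosen camera position. The function name `pixel_to_ray`, the y-up axis convention, and the choice of -z as the forward direction are assumptions made for this example; the source does not specify the renderer's conventions.

```python
import math

def pixel_to_ray(u, v, width, height, camera_pos):
    """Return (origin, direction) for pixel (u, v) of an equirectangular panorama.

    Assumed convention (not from the source): y is up, the image center looks
    along -z, longitude grows to the right, latitude grows toward the top.
    """
    # Sample at the pixel center, then map to longitude in [-pi, pi)
    # and latitude in [-pi/2, pi/2].
    lon = ((u + 0.5) / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - ((v + 0.5) / height) * math.pi

    # Spherical-to-Cartesian conversion; the result has unit length.
    direction = (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        -math.cos(lat) * math.cos(lon),
    )
    # Moving the camera changes only the ray origin; directions stay the same.
    return tuple(camera_pos), direction
```

Under these assumptions, rendering from a different viewpoint amounts to passing a different `camera_pos` and tracing the same set of directions against the scene geometry.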

When the camera position is closer to the window, the window can be opened up. The real-time outdoor scene can be observed from various indoor locations.

The 3D room geometry can be customized with new surface textures in the rendering model. In this example, we changed the wall paint and floor textures of the existing room.

In the real world, the outdoor scene changes throughout different times of the day. In this section, we focus on editing the direct illumination of the sun in the outdoor image, allowing the indoor scene to be rendered under varying sun positions. The figures above demonstrate the edited outdoor images under different weather conditions and at various time points.

In Rendered Scene 1, we add direct sunlight to the cloudy outdoor images and render the scene with various sun positions during the day. In Rendered Scene 2, we adjust the sun position in the afternoon, so that the indoor scene shows its changing appearance as the sun moves from early afternoon to late afternoon.
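To make the idea of moving the sun concrete, the sketch below converts a sun position given as altitude and azimuth into a direction vector and into a pixel location in an upward-facing hemispherical image. It assumes an equidistant fisheye projection with north at the top of the image and east to the right; the actual projection and orientation of the captured outdoor photographs are not stated in the source, and the function names are hypothetical.

```python
import math

def sun_direction(altitude_deg, azimuth_deg):
    """Unit vector toward the sun in an east-north-up frame.

    Azimuth is measured clockwise from north, altitude upward from the horizon.
    """
    alt = math.radians(altitude_deg)
    az = math.radians(azimuth_deg)
    return (
        math.cos(alt) * math.sin(az),  # east
        math.cos(alt) * math.cos(az),  # north
        math.sin(alt),                 # up
    )

def sun_to_fisheye_pixel(altitude_deg, azimuth_deg, cx, cy, radius):
    """Pixel (x, y) of the sun in an equidistant fisheye sky image.

    Assumptions (not from the source): the zenith maps to (cx, cy), the horizon
    maps to a circle of the given radius, north is up, and east is to the right.
    """
    zenith = math.pi / 2.0 - math.radians(altitude_deg)
    # Equidistant projection: image radius is proportional to the zenith angle.
    r = radius * zenith / (math.pi / 2.0)
    az = math.radians(azimuth_deg)
    return (cx + r * math.sin(az), cy - r * math.cos(az))
```

With such a mapping, adding or relocating direct sunlight could be approximated by placing a bright sun disk at the returned pixel, though the editing procedure used in this work may differ.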

We also achieve virtual staging for night scenes, in which the virtual scene is illuminated only by electrical lighting with accurate spectra. The virtual scenes are rendered under different spectra, each producing a distinct indoor appearance.

We contribute a new calibrated HDR (Cali-HDR) dataset that consists of 137 paired indoor and outdoor photographs. In our work, we calibrate the captured HDR panoramas using absolute luminance values measured in each scene. This calibration ensures that our HDR images accurately represent realistic spatially varying lighting conditions. To access the dataset, please visit here.
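The linear calibration described above can be sketched as a single scale factor that maps the relative luminance of an HDR image to absolute units. The example assumes linear RGB pixels, Rec. 709 luminance weights, and one luminance-meter reading per scene taken over a known image region; these details and all function names are illustrative assumptions, not the authors' documented procedure.

```python
# Rec. 709 luminance weights for linear RGB (an assumption; the source does
# not state which RGB-to-luminance conversion was used).
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)

def relative_luminance(rgb):
    """Relative luminance of one linear RGB pixel."""
    r, g, b = rgb
    return REC709_WEIGHTS[0] * r + REC709_WEIGHTS[1] * g + REC709_WEIGHTS[2] * b

def calibration_factor(region_pixels, measured_cd_m2):
    """Scale factor k such that k * relative luminance is in cd/m^2.

    region_pixels: linear RGB pixels covering the spot measured by the
    luminance meter (a hypothetical measurement setup).
    """
    mean_rel = sum(relative_luminance(p) for p in region_pixels) / len(region_pixels)
    if mean_rel <= 0.0:
        raise ValueError("measurement region has no usable signal")
    return measured_cd_m2 / mean_rel

def calibrate(image, k):
    """Apply the linear scale factor to every pixel of a row-major image."""
    return [[tuple(k * c for c in px) for px in row] for row in image]
```

For example, if the measured region averages a relative luminance of 0.5 and the meter reads 100 cd/m², the factor is 200, and every pixel of the panorama is multiplied by it.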